Lately I've been getting into OpenGL because I love looking at awesome animations and visualizations, and I want to start making my own. After a few false starts, I've settled on writing these programs in C++. Besides its great performance, C++ seems to have the widest array of bindings to different parts of the graphics and math ecosystem. In C++ I have direct access to all of the usual C libraries, plus great C++ libraries like GLM that are high-performance, actively maintained, and tailored for graphics programming. The stuff I'm doing right now isn't stressing my graphics subsystem by any stretch of the imagination, but I have ambitions to do more high-performance work in the future, and familiarity with the C++ OpenGL ecosystem will come in handy then.

The downside is that it's kind of hard to share these programs with others. This is one area where WebGL really shines: you can just send someone a link to a page with WebGL and JavaScript on it, and it will render right there in their browser without any futzing around. On the other hand, I've seen a lot of fairly high-quality videos and animated GIFs on Tumblr, so I thought that perhaps I could figure out one of these techniques and share my animations that way.

As it turns out, this is surprisingly difficult.

Animated GIFs

I've seen a lot of awesome animations on Tumblr using animated GIFs. For instance, this animation and this animation are pretty good in my opinion. After I looked into this more, I realized that these images are very carefully crafted to look as good as possible given the limitations of GIF, and that GIF is a really bad solution for general-purpose video.

The most important limitation of GIF is that it's limited to a color palette of 256 colors. This means that for images with fine color gradients you need to use dithering, which looks bad and is kind of hard to do anyway. GIF does allow what is called a "local color table", which gives each frame in the animation its own 256-color palette, so across the whole animation you can use more than 256 colors. Within a single frame, though, each pixel is an index of at most 8 bits into the active palette, so you're still limited to 256 colors per frame.
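To make the 8-bit limit concrete, here is a small sketch that quantizes colors against a fixed "3-3-2" palette (3 bits of red, 3 of green, 2 of blue, 256 entries in total). The fixed palette is an illustrative assumption: GIF color tables can hold any 256 colors, and real encoders usually build an adaptive palette per image. It still shows why gradients band: a smooth 256-step gray ramp collapses to only 8 distinct palette indices. An adaptive palette would do far better on a pure gray ramp, but a frame with smooth gradients in several hues runs into the same wall.

```cpp
#include <cstddef>
#include <cstdint>
#include <set>

// One pixel color (or palette entry) with 8 bits per channel.
struct Rgb {
    std::uint8_t r, g, b;
};

// Map a 24-bit color to an 8-bit index in a fixed 3-3-2 palette by keeping
// the top 3 bits of red, the top 3 of green, and the top 2 of blue.
std::uint8_t to_index_332(Rgb c) {
    return static_cast<std::uint8_t>((c.r & 0xE0) | ((c.g & 0xE0) >> 3) | (c.b >> 6));
}

// Recover the color stored at a palette index (what a decoder would display).
Rgb from_index_332(std::uint8_t i) {
    const int r3 = i >> 5;        // 0..7
    const int g3 = (i >> 2) & 7;  // 0..7
    const int b2 = i & 3;         // 0..3
    return Rgb{static_cast<std::uint8_t>(r3 * 255 / 7),
               static_cast<std::uint8_t>(g3 * 255 / 7),
               static_cast<std::uint8_t>(b2 * 255 / 3)};
}

// Quantize a smooth 256-step gray ramp and count the distinct indices used.
std::size_t distinct_levels_in_gray_ramp() {
    std::set<std::uint8_t> used;
    for (int v = 0; v < 256; ++v) {
        const auto g = static_cast<std::uint8_t>(v);
        used.insert(to_index_332(Rgb{g, g, g}));
    }
    return used.size();
}
```

Because every pixel must be stored as one of those 256 indices, the 256 input grays end up as 8 visible bands, and hiding those bands is exactly what dithering is for.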

Besides the color limitation, generating animated GIFs is pretty difficult. I was pleased at first to find GIFLIB, but the API it actually provides is incredibly low-level and difficult to use. For instance, it can't take raw RGB data and generate a GIF automatically: it's your job to generate the color palette, dither the input data, and write out the raw frame data.
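For a sense of what "dither the input data" involves, here is a minimal Floyd-Steinberg error-diffusion sketch for a single gray channel quantized to a handful of evenly spaced levels. This is a simplification of what a GIF encoder needs: a real one would diffuse the error per RGB channel against whatever palette it chose. It is not GIFLIB's API, and the function name dither_gray is hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Floyd-Steinberg error diffusion on one 8-bit gray channel (row-major).
// Each pixel is snapped to the nearest of `levels` evenly spaced values in
// [0, 255]; the rounding error is pushed onto not-yet-visited neighbours with
// the classic 7/16, 3/16, 5/16, 1/16 weights. Requires levels >= 2.
std::vector<unsigned char> dither_gray(const std::vector<unsigned char>& src,
                                       int width, int height, int levels) {
    std::vector<float> buf(src.begin(), src.end());  // pixels plus carried error
    std::vector<unsigned char> out(src.size());
    const float step = 255.0f / static_cast<float>(levels - 1);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const int i = y * width + x;
            const float old = std::min(255.0f, std::max(0.0f, buf[i]));
            const float q = std::round(old / step) * step;  // nearest level
            out[i] = static_cast<unsigned char>(q);
            const float err = old - q;
            if (x + 1 < width) buf[i + 1] += err * 7.0f / 16.0f;
            if (y + 1 < height) {
                if (x > 0) buf[i + width - 1] += err * 3.0f / 16.0f;
                buf[i + width] += err * 5.0f / 16.0f;
                if (x + 1 < width) buf[i + width + 1] += err * 1.0f / 16.0f;
            }
        }
    }
    return out;
}
```

Dithering a flat 50% gray down to pure black and white with dither_gray(pixels, w, h, 2) gives a roughly even checkerboard-like mix of 0 and 255 pixels instead of a solid block, which is how a limited palette fakes intermediate shades.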

There are a few other libraries out there for working with GIF images, but the main alternative, and I suspect what most people are using, seems to be ImageMagick/GraphicsMagick.

What you would do in this model is generate a bunch of raw image frames and then stitch them together into an animation using the convert command. There are some great documents on how to do this, for instance this basic animation guide and this optimized animation guide.
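Here is a minimal sketch of the frame-dumping half of that workflow. It assumes frames are written as binary PPM files (a simple format ImageMagick reads) with hypothetical names like frame_0000.ppm. The optional row flip undoes OpenGL's bottom-row-first pixel order, and the delay is rounded because GIF stores frame delays in hundredths of a second, so a rate like 24 fps can't be hit exactly.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>
#include <vector>

// Write one frame as a binary PPM (P6). If `bottom_up` is true, rows are
// written in reverse order, which undoes glReadPixels' bottom-row-first layout.
bool write_ppm(const std::string& path, int width, int height,
               const std::vector<unsigned char>& rgb, bool bottom_up) {
    std::FILE* f = std::fopen(path.c_str(), "wb");
    if (!f) return false;
    std::fprintf(f, "P6\n%d %d\n255\n", width, height);
    const std::size_t row_bytes = static_cast<std::size_t>(width) * 3;
    bool ok = true;
    for (int y = 0; y < height && ok; ++y) {
        const int src_row = bottom_up ? height - 1 - y : y;
        ok = std::fwrite(rgb.data() + src_row * row_bytes, 1, row_bytes, f) == row_bytes;
    }
    return std::fclose(f) == 0 && ok;
}

// Hypothetical naming scheme: zero padding keeps the shell glob
// frame_*.ppm in frame order.
std::string frame_name(int n) {
    char buf[32];
    std::snprintf(buf, sizeof buf, "frame_%04d.ppm", n);
    return buf;
}

// Build the convert command; -delay is in 1/100 s, rounded to the nearest
// whole tick, and -loop 0 makes the GIF loop forever.
std::string convert_command(int fps, const std::string& out) {
    const int delay = (100 + fps / 2) / fps;
    return "convert -delay " + std::to_string(delay) + " -loop 0 frame_*.ppm " + out;
}
```

After dumping the frames, convert_command(25, "anim.gif") yields convert -delay 4 -loop 0 frame_*.ppm anim.gif, which stitches them into a looping GIF. The palette and dithering work then happens inside ImageMagick rather than in your own code.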

However, the more I looked into this, the more complicated, slow, and weird it seemed.

The other thing I realized is that the good-looking GIF animations I was seeing on Tumblr are mostly short sequences carefully stitched together into a loop. For instance, if you look at the images I mentioned above on a site like GIF Explode, you'll see that each animation has only a small number of frames (20-30 or so). This is very different from posting a 10-second animation at 24 fps, which will be 240 frames, potentially each with its own local color table.

As a result of these limitations, I decided to abandon the GIF approach. If I do any animations that can be represented as short loops I will probably revisit this approach.

HTML5 Video

The other option I explored was generating an mp4 video using the ffmpeg command-line tool. This is an attractive option because it's really easy to do. Basically, you call glReadPixels() to read your raw RGB data into a buffer, and then send those pixels over a Unix pipe to the ffmpeg process. When you invoke ffmpeg you give it a few options to tell it the container format, pixel format, and dimensions of the input data. You also have to tell it to flip the data vertically, since glReadPixels() returns rows starting from the bottom of the image while ffmpeg expects the top row first. The actual invocation in the C++ code ends up looking something like:

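```cpp
FILE *ffmpeg = popen("/usr/bin/ffmpeg -vcodec rawvideo -f rawvideo -pix_fmt rgb24 -s 640x480 -r 60 -i pipe:0 -vf vflip -vcodec h264 -pix_fmt yuv420p out.mp4", "w");
```

Here -r 60 comes before -i so that it sets the frame rate of the incoming raw frames; placed after -i it would only set the output rate, and ffmpeg would assume the raw input runs at its default of 25 fps. The second -pix_fmt yuv420p requests the 4:2:0 chroma format that browsers reliably play.

Then the raw image data for each frame can be sent to the pipe in the usual way using fwrite(3). After the pipe is closed with pclose(3), ffmpeg finishes encoding and quits, leaving a file out.mp4 with the encoded video.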
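Putting the pieces together, here is a sketch of the whole capture loop. It assumes a POSIX system (popen/pclose) and an ffmpeg on the PATH that was built with an H.264 encoder. To keep it runnable without a GL context, a hypothetical render_test_frame() fills the buffer with a scrolling gradient, and a comment marks where glReadPixels() would go in a real program. The command places -r before -i and requests yuv420p output, for the reasons given in its comments.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>
#include <vector>

// Build an ffmpeg command that reads raw rgb24 frames from stdin.
// -r sits before -i so it sets the input frame rate; -vf vflip undoes
// glReadPixels' bottom-row-first order; yuv420p output plays in browsers.
std::string ffmpeg_command(int width, int height, int fps, const std::string& out) {
    return "ffmpeg -y -f rawvideo -vcodec rawvideo -pix_fmt rgb24 -s " +
           std::to_string(width) + "x" + std::to_string(height) +
           " -r " + std::to_string(fps) + " -i pipe:0" +
           " -vf vflip -vcodec h264 -pix_fmt yuv420p " + out;
}

// Write one tightly packed frame (width * height * 3 bytes) to the pipe.
// Returns false on a short write. If ffmpeg has already exited, POSIX
// delivers SIGPIPE first, which terminates the process unless it is ignored.
bool write_frame(std::FILE* pipe, const std::vector<unsigned char>& rgb) {
    return std::fwrite(rgb.data(), 1, rgb.size(), pipe) == rgb.size();
}

// Hypothetical stand-in for rendering: a gradient that scrolls with frame n.
void render_test_frame(std::vector<unsigned char>& rgb, int width, int height, int n) {
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            unsigned char* p = &rgb[(static_cast<std::size_t>(y) * width + x) * 3];
            p[0] = static_cast<unsigned char>((x + n) % 256);
            p[1] = static_cast<unsigned char>(y % 256);
            p[2] = 128;
        }
    }
}

// Encode `frames` frames to `out`; returns pclose's status, or -1 if popen fails.
int encode(int width, int height, int fps, int frames, const std::string& out) {
    std::FILE* pipe = popen(ffmpeg_command(width, height, fps, out).c_str(), "w");
    if (!pipe) return -1;
    std::vector<unsigned char> rgb(static_cast<std::size_t>(width) * height * 3);
    for (int n = 0; n < frames; ++n) {
        render_test_frame(rgb, width, height, n);
        // In the OpenGL program, capture the framebuffer here instead:
        //   glPixelStorei(GL_PACK_ALIGNMENT, 1);
        //   glReadPixels(0, 0, width, height, GL_RGB, GL_UNSIGNED_BYTE, rgb.data());
        if (!write_frame(pipe, rgb)) break;
    }
    return pclose(pipe);  // closes ffmpeg's stdin, then waits for it to finish
}
```

Calling encode(640, 480, 60, 600, "out.mp4") would write ten seconds of test video. Setting GL_PACK_ALIGNMENT to 1 matters because glReadPixels otherwise pads each row to a multiple of 4 bytes, which breaks the tightly packed rgb24 layout ffmpeg expects whenever width * 3 isn't a multiple of 4.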

This actually generates an output file that looks pretty decent (although the colors end up looking washed out due to some problem related to linear RGB vs. sRGB that I haven't figured out). There are definitely noticeable video encoding artifacts compared to the actual OpenGL animation, but it doesn't seem too unreasonable.

The problem is that when I upload a video like this to Tumblr, it gets re-encoded, and then on the Tumblr pages it's resized yet again in the browser, so the animation looks really bad.

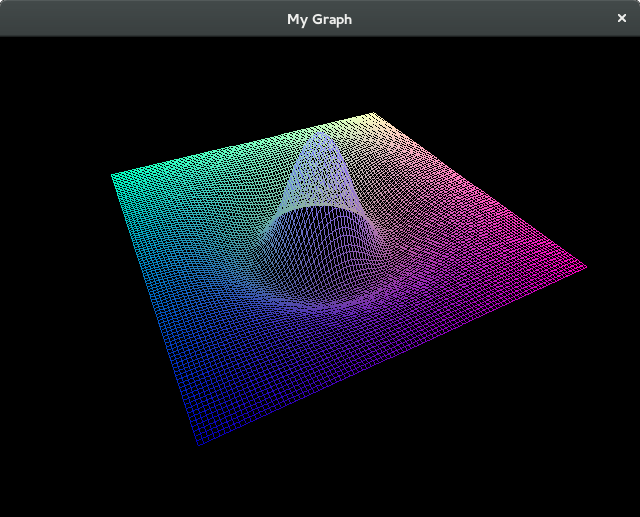

In the actual animation, a frame will look like this:

When I encode the video and send it up to Tumblr, it ends up looking like like this. If you look at the source mp4 file on Tumblr it's definitely a lot worse than the reference image above, but it's not as bad as the way it's rendered on the Tumblr post page.

I may end up sticking with this technique and just hosting the files myself, since my source videos are of good enough quality (better than the re-encoded source mp4 file above), and I don't actually have that many visitors to this site, so the bandwidth costs aren't a real issue.

tl;dr Posting high-quality OpenGL animations to the internet is hard to do, and I'm still trying to figure out the best solution.